apples and Apples

Semi-supervised learning for WSD

Word sense disambiguation (WSD) is the task of determining the correct sense of a particular occurrence of a word that has multiple meanings. An example is the word “bank”, whose usual meaning relates to finance, while another refers to a slope of grass or earth. Disambiguating word occurrences is a key task for many basic Natural Language Processing (NLP) components, since these components assume that the input text is unambiguous. A strategy widely adopted by methods for this task is to emulate the disambiguation process carried out by humans when reading, that is, to use information about the context of a word occurrence.
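To make the idea of "context" concrete, here is a minimal Python sketch of how collocation features might be extracted around an ambiguous occurrence. The function name `context_features`, the window size, and the two feature types (adjacent words tagged with their offset, and any word within a window) are illustrative assumptions, not the exact feature set used in the article.

```python
def context_features(tokens, index, window=3):
    """Return collocation features describing the context of tokens[index].

    Two illustrative (hypothetical) feature types:
    - adjacent words, tagged with their offset, e.g. "-1:river"
    - any word within +/- window positions, without position, e.g. "win:grass"
    """
    features = set()
    # Immediately adjacent words, keeping their relative position.
    for offset in (-1, 1):
        j = index + offset
        if 0 <= j < len(tokens):
            features.add(f"{offset:+d}:{tokens[j].lower()}")
    # Bag of words in a symmetric window around the target occurrence.
    lo = max(0, index - window)
    hi = min(len(tokens), index + window + 1)
    for j in range(lo, hi):
        if j != index:
            features.add(f"win:{tokens[j].lower()}")
    return features
```

For example, `context_features("he sat on the river bank".split(), 5)` yields `{"-1:river", "win:on", "win:the", "win:river"}`; a feature such as `-1:river` is strong evidence for the slope-of-earth sense of “bank”.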

I conducted a research project around a classic word sense disambiguation algorithm introduced by Yarowsky. Through a close inspection of this algorithm, I identified factors that are relevant to its performance, some of which are only implicitly considered in the literature where the algorithm was first described. The factors are the following:

- strategy for initial labeling,

- types of collocations or evidence,

- consideration of the one-sense-per-discourse heuristic,

- formalization of rule confidence,

- rule confidence threshold,

- allowance for change or removal of a label for an instance, and

- coverage criteria.
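The factors above can be mapped onto a compact bootstrapping loop. The following Python sketch is a simplified, hypothetical rendering of a Yarowsky-style decision-list learner, not the implementation from the article: seed features provide the initial labeling, instances are sets of collocation features, rule confidence is formalized as a smoothed log ratio (one possible choice), and parameters control the confidence threshold, the one-sense-per-discourse heuristic, and whether labels may be changed or removed. Stopping at a fixed point or after `max_iter` iterations stands in for a coverage criterion. All names and default values are assumptions.

```python
import math
from collections import Counter, defaultdict


def train_decision_list(labeled, alpha=0.1, threshold=1.0):
    """Return rules (confidence, feature, sense), most confident first.

    Confidence (an assumed formalization) is a smoothed log ratio:
        log((count(f, s) + alpha) / (count(f, other senses) + alpha))
    """
    counts = defaultdict(Counter)  # feature -> Counter of senses
    for features, sense in labeled:
        for f in features:
            counts[f][sense] += 1
    rules = []
    for f, by_sense in counts.items():
        total = sum(by_sense.values())
        for sense, c in by_sense.items():
            confidence = math.log((c + alpha) / (total - c + alpha))
            if confidence >= threshold:  # rule confidence threshold
                rules.append((confidence, f, sense))
    rules.sort(key=lambda rule: -rule[0])
    return rules


def classify(rules, features):
    """Decision list: the first (most confident) matching rule decides."""
    for _, f, sense in rules:
        if f in features:
            return sense
    return None


def bootstrap(instances, seeds, threshold=1.0, alpha=0.1,
              one_sense_per_discourse=True, allow_relabel=True, max_iter=20):
    """instances: list of (doc_id, feature_set); seeds: {feature: sense}."""
    # Initial labeling: an instance containing a seed feature gets its sense.
    labels = [next((s for f, s in seeds.items() if f in feats), None)
              for _, feats in instances]
    for _ in range(max_iter):
        labeled = [(feats, lab) for (_, feats), lab in zip(instances, labels)
                   if lab is not None]
        rules = train_decision_list(labeled, alpha, threshold)
        new_labels = []
        for (_, feats), old in zip(instances, labels):
            if old is not None and not allow_relabel:
                new_labels.append(old)  # labels are never changed or removed
            else:
                # May change the label, or remove it if no rule matches.
                new_labels.append(classify(rules, feats))
        if one_sense_per_discourse:
            # Give every instance in a document that document's majority sense.
            votes = defaultdict(Counter)
            for (doc, _), lab in zip(instances, new_labels):
                if lab is not None:
                    votes[doc][lab] += 1
            new_labels = [votes[doc].most_common(1)[0][0] if votes[doc] else lab
                          for (doc, _), lab in zip(instances, new_labels)]
        if new_labels == labels:  # stop once labeling reaches a fixed point
            break
        labels = new_labels
    return labels
```

Setting `allow_relabel=False` freezes a label once it is assigned, whereas the default lets a more confident rule overwrite a label or remove it when no rule above the threshold matches. With a very high `threshold`, the two settings diverge sharply: the default ends up removing every label, while frozen seed labels survive and can still spread through the one-sense-per-discourse step.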

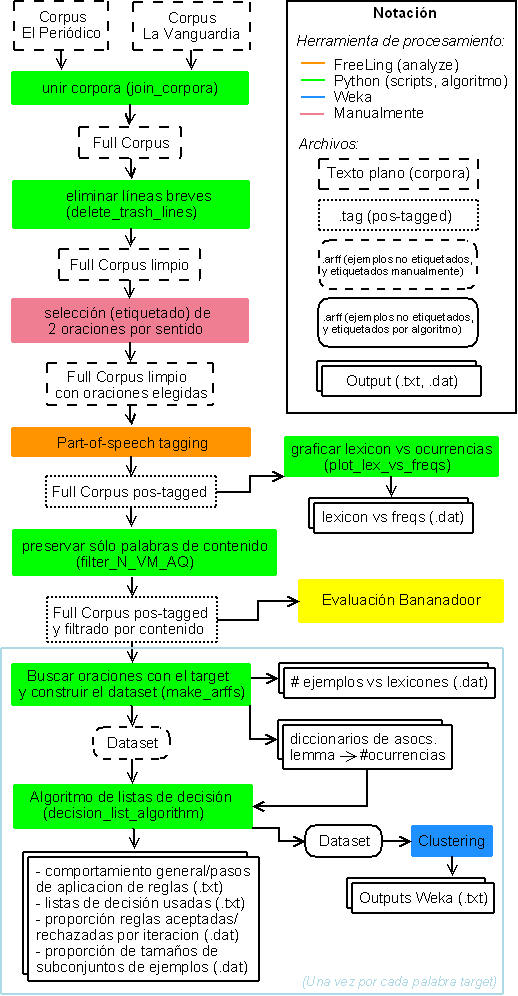

I then proposed a lightly supervised version of the decision-rule algorithm for WSD and studied the impact of different settings of the identified factors. The evaluation relies on pseudo-words and shows that the proposed approach achieves performance comparable to that of highly optimized versions of the algorithm.
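Pseudo-words are a common way to evaluate WSD without hand-annotated data: two unrelated words are conflated into one artificial ambiguous token, and the original word serves as the gold-standard sense. The Python sketch below illustrates the idea; the function names, the underscore-joined token, and the plain accuracy metric are assumptions, and the article's exact evaluation protocol may differ.

```python
def make_pseudoword_corpus(sentences, word_a, word_b):
    """Conflate word_a and word_b into one artificial ambiguous token.

    Returns (tokens, index, gold) triples; gold is the original word,
    which plays the role of the sense label during evaluation.
    """
    pseudo = f"{word_a}_{word_b}"  # hypothetical naming scheme
    targets = {word_a, word_b}
    examples = []
    for sentence in sentences:
        tokens = sentence.split()
        # Hide every occurrence so no sentence leaks the answer elsewhere.
        hidden = [pseudo if t.lower() in targets else t for t in tokens]
        for i, tok in enumerate(tokens):
            if tok.lower() in targets:
                examples.append((hidden, i, tok.lower()))
    return examples


def accuracy(predictions, gold):
    """Fraction of instances whose predicted sense matches the gold word."""
    if not gold:
        return 0.0
    return sum(p == g for p, g in zip(predictions, gold)) / len(gold)
```

For instance, conflating “banana” and “door” turns “close the door now” into `["close", "the", "banana_door", "now"]` with gold label `"door"`; a disambiguator is then scored by how often it recovers the hidden word.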

For the technical details about the original algorithm, the identified factors, the proposed version of the method, the evaluation strategy, and the experimental results, please read my article.