neural type

Deep learning for entity typing

Entities, such as persons, organizations, and locations, are natural units for organizing information. Whether represented as semi-structured articles in knowledge repositories like Wikipedia or as structured facts in knowledge bases, entities are at the core of the current paradigm of web search: they can provide not only more focused responses, but often immediate answers.

A characteristic property of an entity is its type. For example, the entity Oslo is of type City. Other types for this entity are Settlement, which is more general than City, and Norwegian city, which is more specific than City. An entity is assigned one or more types from a reference type system. Types in a type system are usually arranged in hierarchical relationships, which is why such a system is also known as a type taxonomy or type hierarchy.
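To make the hierarchy concrete, here is a minimal sketch that encodes the Oslo example as a toy taxonomy. It assumes a tree in which each type has a single direct parent; the `PARENT` map and the `ancestors` and `is_subtype` helpers are hypothetical names for illustration, not part of any particular knowledge base.

```python
from typing import Dict, List, Optional

# Hypothetical toy taxonomy: each type maps to its direct parent (None = root).
# Only the three types mentioned in the text are included; a single-parent tree is assumed.
PARENT: Dict[str, Optional[str]] = {
    "Settlement": None,
    "City": "Settlement",
    "Norwegian city": "City",
}

def ancestors(type_name: str) -> List[str]:
    """Return the path from type_name up to the root, most specific type first."""
    path: List[str] = []
    current: Optional[str] = type_name
    while current is not None:
        path.append(current)
        current = PARENT[current]
    return path

def is_subtype(specific: str, general: str) -> bool:
    """True if `general` lies on the path from `specific` to the root."""
    return general in ancestors(specific)

if __name__ == "__main__":
    print(ancestors("Norwegian city"))        # ['Norwegian city', 'City', 'Settlement']
    print(is_subtype("City", "Settlement"))   # True
    print(is_subtype("Settlement", "City"))   # False
```

Walking up the parent links shows why assigning Oslo the type Norwegian city implicitly also makes it a City and a Settlement.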

Knowledge bases store information about the semantic types of entities, which can be utilized in a range of information access tasks. This information, however, is often incomplete, imperfect, or missing altogether for some entities. In addition, new entities that emerge on a daily basis need to be mapped to one or more types of the underlying type system. In this context, our research addresses the task of automatically assigning types from a type taxonomy to entities in a knowledge base.

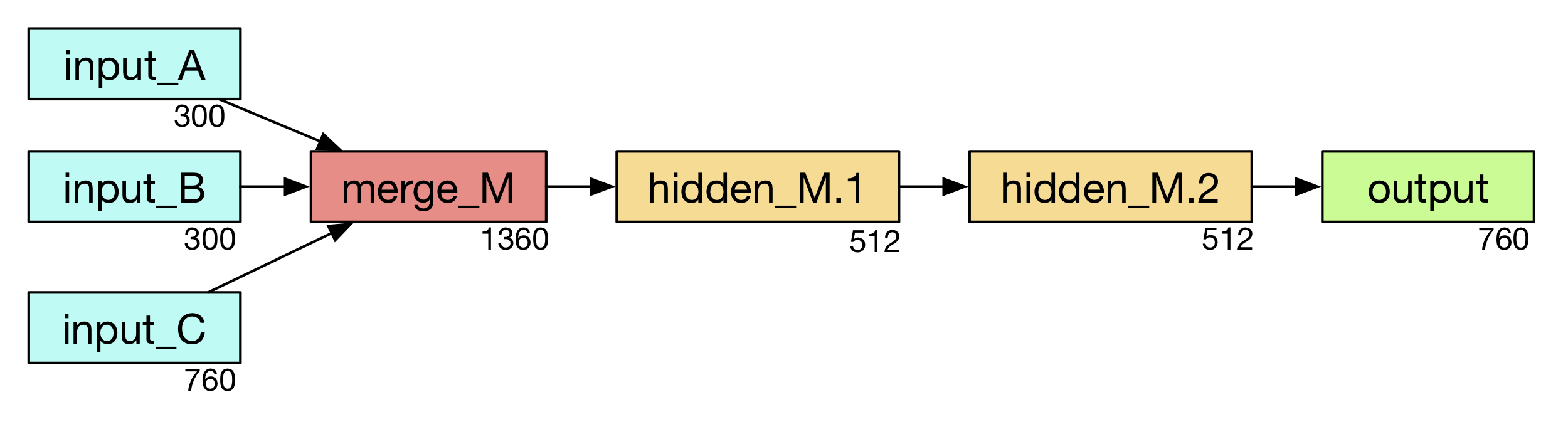

We propose two fully connected feedforward neural network architectures and consider different ways to represent an input entity in order to predict a single type label. The first architecture is a simple fully connected feedforward neural network that can handle different entity vector representations, each supplied through its own input component. A merge layer concatenates the available inputs and feeds the result into the hidden layer.
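The data flow of this first architecture can be sketched as an untrained forward pass in plain Python. This is an illustration under stated assumptions, not the authors' implementation: the two input components, their sizes, the ReLU hidden activation, the softmax output, the random initialization, and the names `flat_forward`, `init_layer`, and `dense` are all hypothetical. The article describes the actual configuration and training.

```python
import math
import random
from typing import List, Sequence, Tuple

Vector = List[float]
Matrix = List[List[float]]

def dense(x: Sequence[float], W: Matrix, b: Sequence[float]) -> Vector:
    """Fully connected layer: returns W @ x + b, where W has shape out x in."""
    return [sum(w * xi for w, xi in zip(row, x)) + bi for row, bi in zip(W, b)]

def relu(x: Sequence[float]) -> Vector:
    return [max(0.0, v) for v in x]

def softmax(z: Sequence[float]) -> Vector:
    m = max(z)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]

def init_layer(n_in: int, n_out: int, rng: random.Random) -> Tuple[Matrix, Vector]:
    """Small random weights and zero biases (assumed initialization)."""
    W = [[rng.uniform(-0.1, 0.1) for _ in range(n_in)] for _ in range(n_out)]
    return W, [0.0] * n_out

def flat_forward(inputs: List[Vector], hidden, output) -> Vector:
    """Merge layer (concatenation) -> one hidden layer -> softmax over types."""
    merged = [v for vec in inputs for v in vec]  # merge layer: concatenate input components
    h = relu(dense(merged, *hidden))
    return softmax(dense(h, *output))

if __name__ == "__main__":
    rng = random.Random(0)
    types = ["Settlement", "City", "Norwegian city"]
    text_vec = [0.2, -0.1, 0.4, 0.0]  # hypothetical input component 1
    graph_vec = [0.5, 0.3, -0.2]      # hypothetical input component 2
    hidden = init_layer(4 + 3, 8, rng)
    output = init_layer(8, len(types), rng)
    probs = flat_forward([text_vec, graph_vec], hidden, output)
    print(types[probs.index(max(probs))], probs)
```

Because the weights are untrained, the printed label is arbitrary; the point is that all representations meet in a single concatenated vector before any hidden layer sees them.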

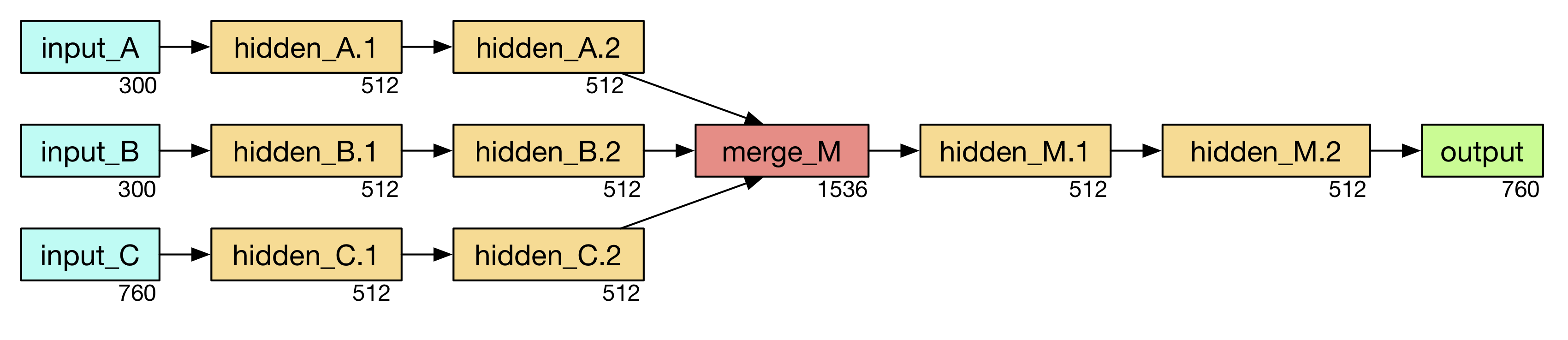

The second architecture, instead, first connects each input component to its own stack of fully connected hidden layers. This added depth allows it to better capture each input entity representation before the representations are combined by vector concatenation.
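The difference from the first architecture is where concatenation happens. The following self-contained sketch passes each input component through its own stack of hidden layers and only then concatenates the branch outputs. As before, the layer sizes, ReLU and softmax activations, random initialization, and the names `init_stack`, `run_stack`, and `deep_forward` are hypothetical choices for illustration, not the configuration reported in the article.

```python
import math
import random
from typing import List, Sequence, Tuple

Vector = List[float]
Layer = Tuple[List[List[float]], List[float]]  # (W, b); W has shape out x in

def dense(x: Sequence[float], layer: Layer) -> Vector:
    """Fully connected layer: returns W @ x + b."""
    W, b = layer
    return [sum(w * xi for w, xi in zip(row, x)) + bi for row, bi in zip(W, b)]

def relu(x: Sequence[float]) -> Vector:
    return [max(0.0, v) for v in x]

def softmax(z: Sequence[float]) -> Vector:
    m = max(z)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]

def init_layer(n_in: int, n_out: int, rng: random.Random) -> Layer:
    W = [[rng.uniform(-0.1, 0.1) for _ in range(n_in)] for _ in range(n_out)]
    return W, [0.0] * n_out

def init_stack(sizes: List[int], rng: random.Random) -> List[Layer]:
    """Stack of fully connected layers; sizes [4, 16, 8] gives two layers."""
    return [init_layer(a, b, rng) for a, b in zip(sizes[:-1], sizes[1:])]

def run_stack(x: Sequence[float], stack: List[Layer]) -> Vector:
    """Pass one input component through its own hidden-layer stack."""
    h = list(x)
    for layer in stack:
        h = relu(dense(h, layer))
    return h

def deep_forward(inputs: List[Vector], stacks: List[List[Layer]], output: Layer) -> Vector:
    """Per-input stacks first, then concatenation, then softmax over types."""
    branch_outputs = [run_stack(x, stack) for x, stack in zip(inputs, stacks)]
    merged = [v for out in branch_outputs for v in out]  # vector concatenation
    return softmax(dense(merged, output))

if __name__ == "__main__":
    rng = random.Random(0)
    types = ["Settlement", "City", "Norwegian city"]
    text_vec = [0.2, -0.1, 0.4, 0.0]  # hypothetical input component 1
    graph_vec = [0.5, 0.3, -0.2]      # hypothetical input component 2
    stacks = [init_stack([4, 16, 8], rng), init_stack([3, 16, 8], rng)]
    output = init_layer(8 + 8, len(types), rng)
    probs = deep_forward([text_vec, graph_vec], stacks, output)
    print(types[probs.index(max(probs))], probs)
```

Each branch can have its own depth and width, so a richer representation can be given more layers before it is merged with the others.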

If you are interested in learning more, please read our article for the technical details about:

- the proposed neural architectures;

- the two test collections, based on DBpedia, that we created: one focusing on established entities and another focusing on emerging entities;

- our experimental results comparing the two architectures and the different input representations.