May I suggest something different?

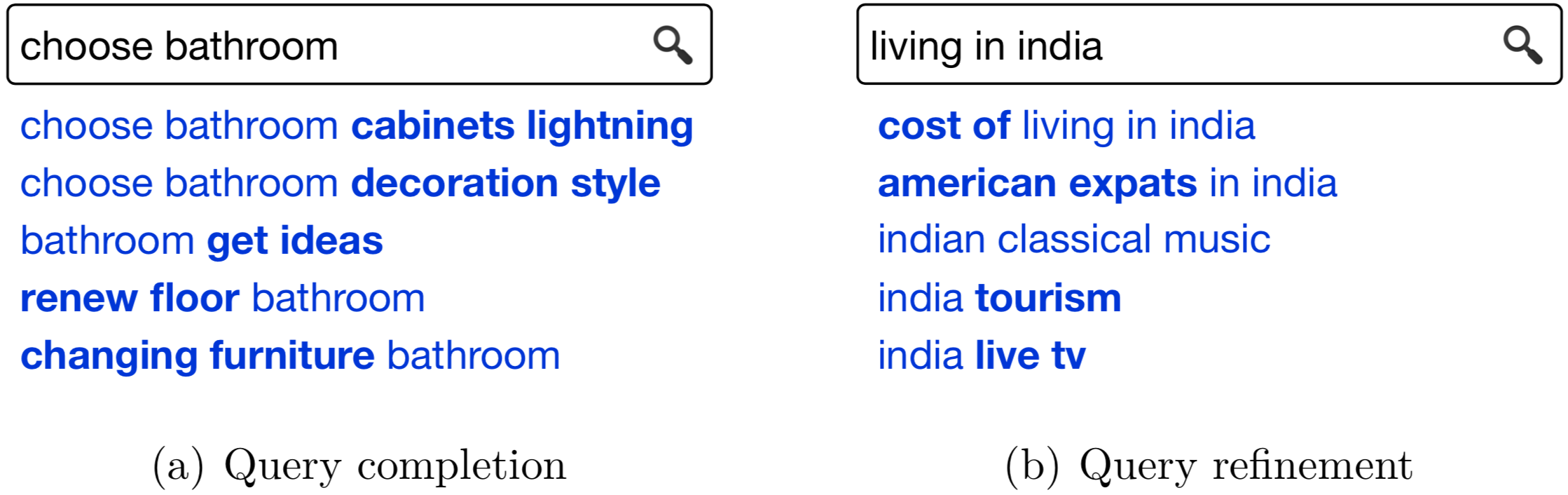

Our second work on query suggestions as a tool for supporting task-based search addresses the same problem as before: producing a ranked list of queries related to an initial user query. As described in the previous post, the query suggestions that our envisaged interface would present come in two flavors: query completions and query refinements.

In the context of query suggestions for supporting task-based search, it was an open question before our work whether a single unified method can produce suggestions in both flavors, or whether specialized models are required. Moreover, an existing end-to-end probabilistic generative model, which combines keyphrase-based suggestions extracted from multiple information sources, relies heavily on the query suggestion services of major web search engines. A second main challenge is therefore to solve this task without relying on suggestions provided by a major web search engine (and possibly even without using a query log).

Adopting a general two-step pipeline approach, consisting of suggestion generation and suggestion ranking steps, we focus exclusively on the first component. Our aim is to generate sufficiently many high-quality query suggestion candidates. The subsequent ranking step will then produce the final ordering of suggestions by reranking these candidates (and ensuring their diversity with respect to the possible subtasks).
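To make the two-step pipeline concrete, here is a minimal sketch of how generation and ranking can be kept separate. Everything in it is an assumption for illustration: the function names, the toy generators, and the placeholder scorer are hypothetical and are not the components evaluated in this work.

```python
from typing import Callable, Iterable, List

# A generator maps a query to candidate suggestions; a scorer rates one candidate.
Generator = Callable[[str], Iterable[str]]
Scorer = Callable[[str, str], float]


def generate_candidates(query: str, generators: List[Generator]) -> List[str]:
    """Step 1: pool candidates from all generators, dropping duplicates
    and candidates identical to the (normalized) input query."""
    query_norm = query.strip().lower()
    seen = set()
    pooled = []
    for generate in generators:
        for candidate in generate(query):
            candidate = candidate.strip().lower()
            if candidate and candidate != query_norm and candidate not in seen:
                seen.add(candidate)
                pooled.append(candidate)
    return pooled


def rank_candidates(query: str, candidates: List[str], scorer: Scorer, k: int = 10) -> List[str]:
    """Step 2: order the pooled candidates by a scoring function and keep the top k."""
    return sorted(candidates, key=lambda c: scorer(query, c), reverse=True)[:k]


# Toy stand-ins (hypothetical): one completion-style and one refinement-style generator.
def toy_completions(query: str) -> List[str]:
    return [f"{query} ideas", f"{query} checklist"]


def toy_refinements(query: str) -> List[str]:
    return ["kids party games", f"{query} ideas"]


candidates = generate_candidates("birthday party", [toy_completions, toy_refinements])
# Placeholder scorer: prefers shorter suggestions. A real ranker would go here.
top = rank_candidates("birthday party", candidates, scorer=lambda q, c: -len(c), k=2)
```

Keeping the two steps behind separate functions means the generation step can be studied on its own, which is the focus here, while the ranker stays a swappable placeholder.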

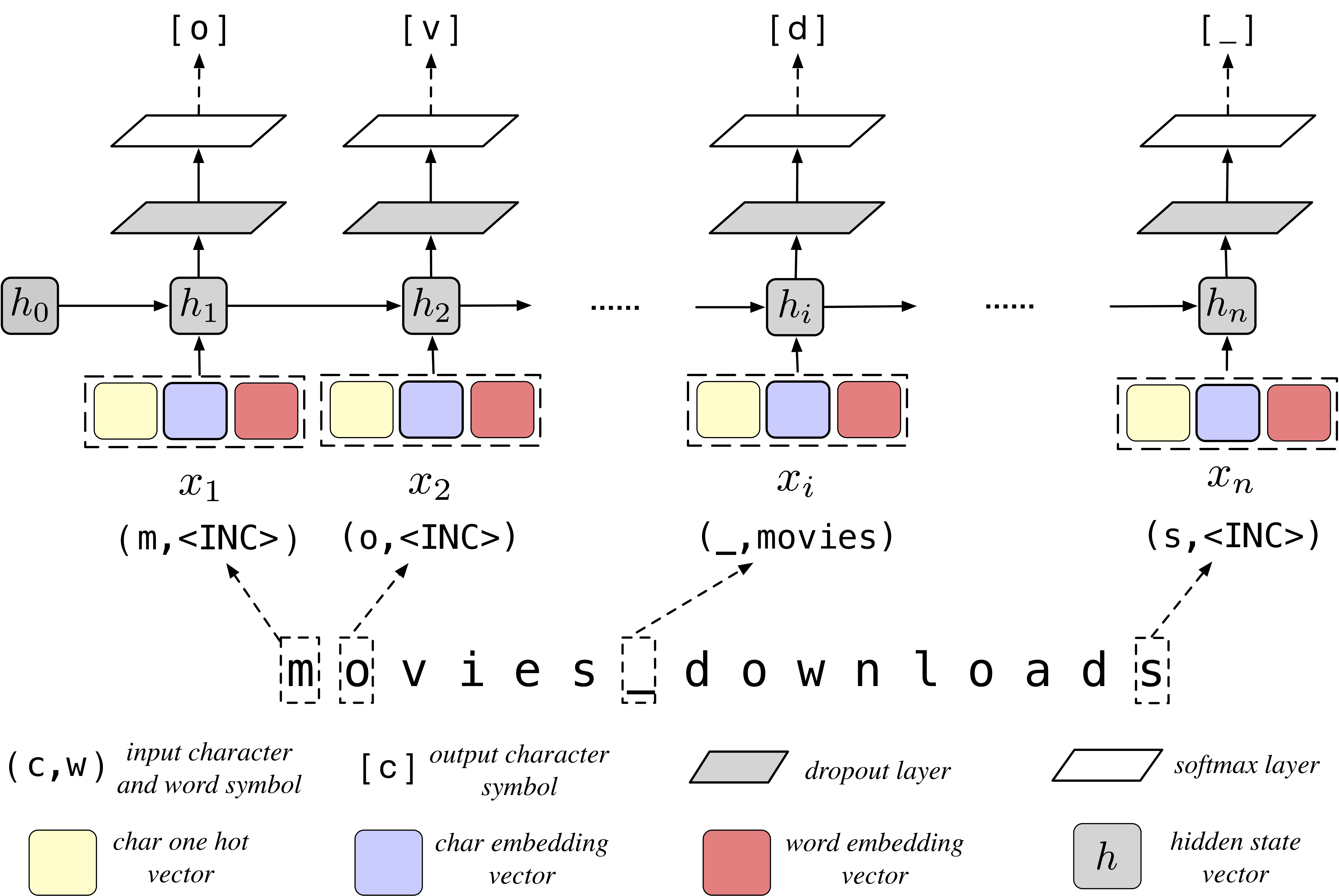

Can existing query suggestion methods generate high-quality query suggestions for task-based search? We study three methods from the literature. The first two methods can only produce query completions, while the third one is able to handle both query completions and refinements. Specifically, we employ a popular suffix method, a neural language model, and a sequence-to-sequence model to generate candidate suggestions.
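As a rough illustration of the simplest of the three methods, the sketch below follows one simplified reading of the popular suffix idea: count frequent word-level suffixes in a collection of queries, then append the most frequent ones to the input query. The function names, the `max_len` cutoff, and the example queries are assumptions made for this sketch and do not reproduce the exact configuration used in our experiments.

```python
from collections import Counter
from typing import Iterable, List


def build_suffix_counts(queries: Iterable[str], max_len: int = 3) -> Counter:
    """Count word-level suffixes (up to max_len words) over a query collection."""
    counts = Counter()
    for query in queries:
        words = query.lower().split()
        # Keep at least one leading word, so a suffix is never the whole query.
        for n in range(1, min(max_len, len(words) - 1) + 1):
            counts[" ".join(words[-n:])] += 1
    return counts


def complete(query: str, suffix_counts: Counter, k: int = 5) -> List[str]:
    """Generate query completions by appending the k most frequent suffixes."""
    return [f"{query} {suffix}" for suffix, _ in suffix_counts.most_common(k)]


# Made-up queries standing in for a real source such as a query log.
example_queries = [
    "cheap flights to paris",
    "hotels in paris",
    "things to do in paris",
]
suffix_counts = build_suffix_counts(example_queries)
completions = complete("museums", suffix_counts, k=2)
```

Because this kind of method only ever appends text to the input, it can produce completions but not refinements, which is the limitation noted above for the first two methods.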

What are useful information sources for each method? We consider three independent information sources. We are particularly interested in finding out how a task-oriented knowledge base (Know-How) and a community question answering site (WikiAnswers) fare against a query log (AOL).

Our experimental results lead to the following main observations:

- the sequence-to-sequence approach performs best among the three tested methods, and, overall, it can generate suggestions in both query completion and query refinement flavors;

- our best numbers are similar to those of the Google API, but with this API the number of generated suggestions per query is limited compared to ours;

- regarding the data sources, we observe that, as expected, the query log is the one leading to the highest performance, yet it does not perform well on query refinements;

- overall, we find that different method-source configurations contribute unique suggestions, and thus it is beneficial to combine them.
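The last observation can be checked with a simple set computation: for each method-source configuration, look at the suggestions that no other configuration produced. The sketch below is only an illustration under assumed names; the configuration labels and the suggestions are made-up placeholders, not our experimental data.

```python
from typing import Dict, Set


def unique_contributions(suggestions: Dict[str, Set[str]]) -> Dict[str, Set[str]]:
    """For each configuration, return the suggestions no other configuration produced."""
    unique = {}
    for name, own in suggestions.items():
        # Union of the suggestions from every other configuration.
        others = set().union(*(s for n, s in suggestions.items() if n != name))
        unique[name] = own - others
    return unique


def combine(suggestions: Dict[str, Set[str]]) -> Set[str]:
    """Pool the suggestions of all configurations into one candidate set."""
    return set().union(*suggestions.values())


# Placeholder configurations and suggestions, for illustration only.
by_config = {
    "config_a": {"birthday party ideas", "birthday party games"},
    "config_b": {"birthday party games", "plan a birthday party"},
}
unique = unique_contributions(by_config)
pooled = combine(by_config)
```

If every configuration has a non-empty set of unique suggestions, the pooled candidate set is strictly larger than any single configuration's output, which is the argument for combining them.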

If you would like to know more, please read Sections 6.5 and 6.6 of my thesis, *Task-Based Support in Search Engines*, for the technical details of the problem, the terminology, the methodology, and the experimental results and analysis.